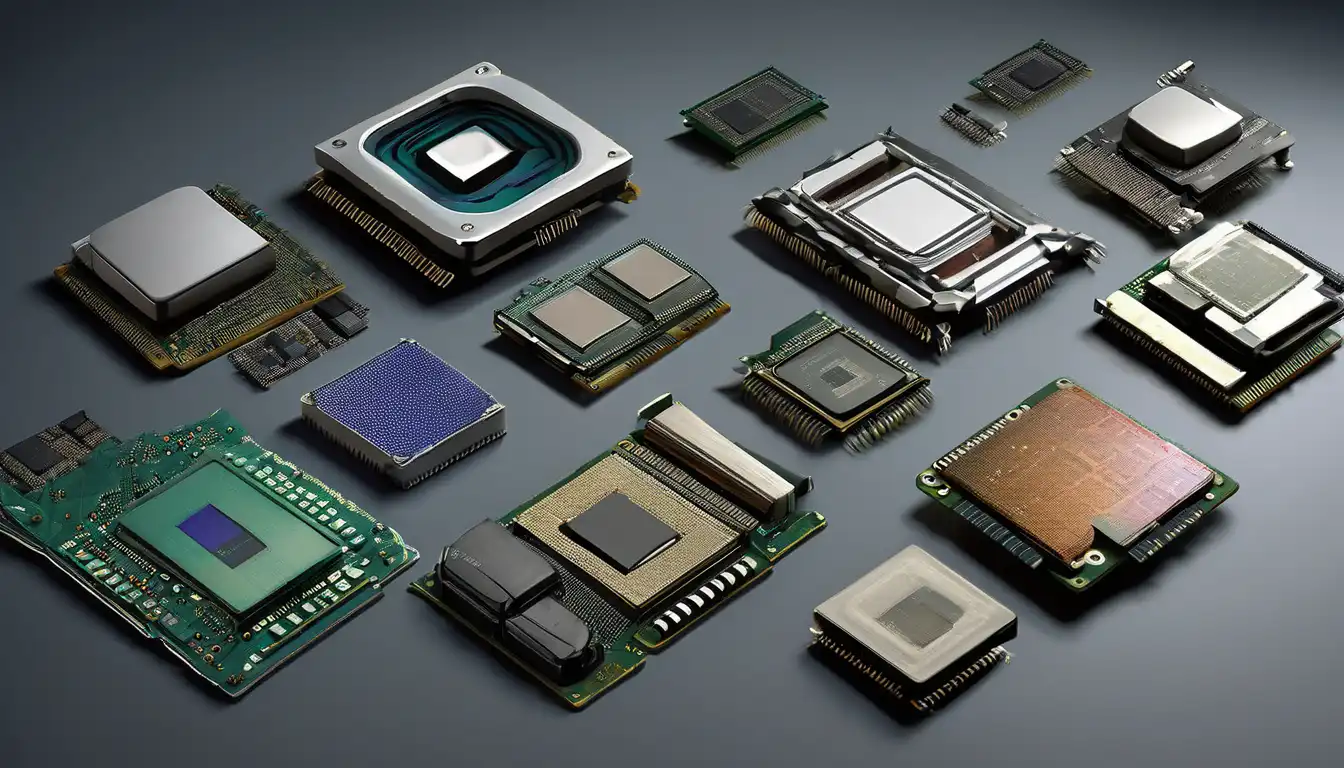

The Dawn of Computing: Early Processor Technologies

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with massive vacuum tube systems that occupied entire rooms, processors have transformed into microscopic marvels capable of billions of calculations per second. This transformation didn't happen overnight but through decades of innovation, breakthroughs, and relentless pursuit of efficiency.

In the 1940s and 1950s, the first electronic computers used vacuum tubes as their primary processing components. These early processors were enormous, power-hungry, and prone to frequent failures. The ENIAC, completed in 1945, contained approximately 17,468 vacuum tubes and consumed 150 kilowatts of electricity. Despite their limitations, these early systems laid the foundation for modern computing by demonstrating that electronic devices could perform complex calculations.

The Transistor Revolution

The invention of the transistor in 1947 by Bell Labs scientists marked a pivotal moment in processor evolution. Transistors were smaller, more reliable, and consumed significantly less power than vacuum tubes. By the late 1950s, transistors were rapidly replacing vacuum tubes in new computer designs, enabling more compact and efficient systems.

The transition to transistors allowed for the development of second-generation computers that were more practical for business and scientific applications. IBM's 7000 series and DEC's PDP computers demonstrated the commercial viability of transistor-based systems, paving the way for the computing revolution that would follow.

The Integrated Circuit Era

The next major leap came with the development of integrated circuits in the late 1950s. Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959) independently developed methods for combining transistors and other circuit components on a single piece of semiconductor. Kilby's first device used germanium, while Noyce's planar design used silicon, the material that went on to dominate the industry. This breakthrough allowed for even greater miniaturization and set the stage for the microprocessor revolution.

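Integrated circuits enabled the creation of third-generation computers that were smaller, faster, and more affordable than their predecessors. The IBM System/360, announced in 1964, became one of the most successful computer families in history. Built with IBM's Solid Logic Technology, a hybrid module that bridged discrete transistors and fully integrated circuits, it showed the value of a single compatible architecture spanning a whole range of machines, so that software written for one model could run on the others.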

The Birth of the Microprocessor

The year 1971 marked a watershed moment with Intel's introduction of the 4004, the world's first commercially available microprocessor. This 4-bit processor contained about 2,300 transistors and ran at up to 740 kHz, modest by today's standards but revolutionary at the time. The 4004 demonstrated that an entire central processing unit could be manufactured on a single chip.

Intel followed this success with the 8008 in 1972 and the groundbreaking 8080 in 1974. These 8-bit processors found their way into early personal computers such as the Altair 8800, as well as terminals and embedded systems, establishing the microprocessor as the future of computing.

The Personal Computer Revolution

The late 1970s and early 1980s saw processors become increasingly powerful and accessible. Intel's 8086 and 8088 processors, introduced in 1978 and 1979 respectively, formed the foundation of the x86 architecture that would dominate personal computing for decades. IBM's selection of the 8088 for its first PC in 1981 cemented Intel's position as the industry leader.

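Competition came from companies like Motorola, whose 68000 series powered machines such as the Apple Macintosh, and Zilog, whose Z80 appeared in many 8-bit home computers. Even so, the x86 architecture gradually became the standard for personal computing. The 1980s also saw the rise of reduced instruction set computing (RISC) architectures such as MIPS, SPARC, and ARM, which relied on a small set of simple instructions that could be pipelined efficiently and delivered strong performance in workstations and, later, embedded devices.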

The Clock Speed Race

Throughout the 1990s, processor manufacturers competed fiercely to increase clock speeds. Intel's Pentium, introduced in 1993, brought superscalar execution to mainstream computing: with two parallel pipelines, it could execute up to two instructions per clock cycle. AMD emerged as a serious competitor with its Athlon processors, launched in 1999, challenging Intel's dominance.

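In March 2000, AMD and Intel both shipped processors running at 1 GHz, a milestone that had seemed out of reach just a few years earlier, and Intel's Pentium 4 pushed clock speeds higher still over the following years. However, this focus on clock speed eventually ran into physical limits: each increase in frequency drove power consumption and heat output toward levels that chips and their cooling systems could no longer handle.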

The Multi-Core Revolution

The early-to-mid 2000s marked a fundamental shift in processor design philosophy. Instead of continuing to push for higher clock speeds, manufacturers began integrating multiple processor cores onto a single chip. For software that can split its work across those cores, this approach improved performance without a matching rise in power consumption and heat generation.

Intel's Core 2 Duo processors, introduced in 2006, demonstrated the effectiveness of multi-core architecture for both desktop and mobile computing. AMD had already shipped its dual-core Athlon 64 X2 in 2005, and the rivalry between the two companies intensified competition and drove further innovation. This era also saw graphics processors (GPUs) become programmable for general-purpose computing, and system-on-chip (SoC) designs spread into mobile devices.

Modern Processor Architectures

Today's processors represent the culmination of decades of innovation. Modern CPUs incorporate multiple cores, sophisticated cache hierarchies, and advanced power management features. The distinction between different types of processors has blurred, with CPUs, GPUs, and specialized accelerators often integrated into single packages.

Apple's M-series processors demonstrate how far processor technology has advanced, combining CPU, GPU, and neural processing units on a single chip with exceptional power efficiency. Meanwhile, AMD's Ryzen and Intel's Core processors continue to push performance in desktop and laptop computers, while AMD's EPYC and Intel's Xeon lines serve the server market.

The Future of Processor Technology

Looking ahead, several emerging technologies promise to shape the next chapter of processor evolution. Quantum computing represents a fundamentally different approach to processing information, potentially solving problems that are intractable for classical computers. Neuromorphic computing, inspired by the human brain, offers new paradigms for artificial intelligence and pattern recognition.

Chiplet architecture, which combines multiple specialized chips in a single package, is already used in products such as AMD's Ryzen and EPYC processors and may become the dominant approach for future designs. This modular design allows for better manufacturing yields, more flexible configurations, and continued performance improvements despite the growing difficulty of shrinking transistors further.

The evolution of computer processors has been characterized by continuous innovation and adaptation. From vacuum tubes to quantum computing, each generation has built upon the achievements of its predecessors while opening new possibilities for the future. As we stand on the brink of new computing paradigms, the journey of processor technology continues to be one of the most exciting stories in modern science and engineering.
